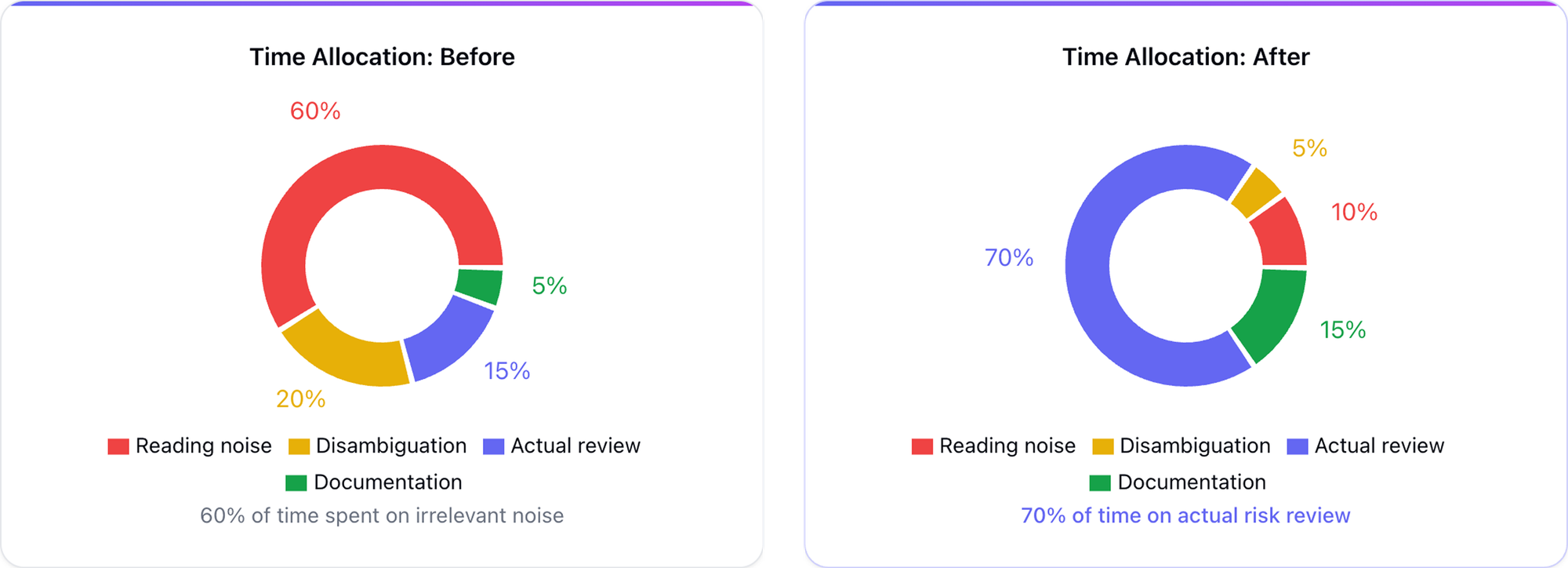

Compliance operations are under sustained pressure. Regulatory expectations continue to expand, while many KYB workflows still depend on manual data collection, fragmented tooling, and repeated customer follow-ups. The result is longer time-to-decision, higher cost per case, and uneven outcomes across analysts, regions, and business units.

This dynamic has turned KYB from a purely risk-management discipline into an operational and auditability challenge. Adding headcount can raise throughput temporarily, but it often compounds cost, variability, and governance friction. A more durable approach is workflow redesign, with automation focused on evidence capture, standardization, and repeatability.

Evidence-first workflows instead of task switching

In many organizations, KYB execution still follows an analyst-driven assembly process. Evidence is gathered across multiple sources, screenshots are captured, and narratives are written late in the cycle. This model is slow, difficult to audit, and sensitive to individual analyst judgment.

AI agents enable a different operating model. The workflow prioritizes structured, evidence-linked outputs early, then routes exceptions and decisions to human reviewers. The practical value is not generic automation. It is the conversion of unstructured inputs into consistent, reviewable artifacts that support audit readiness.

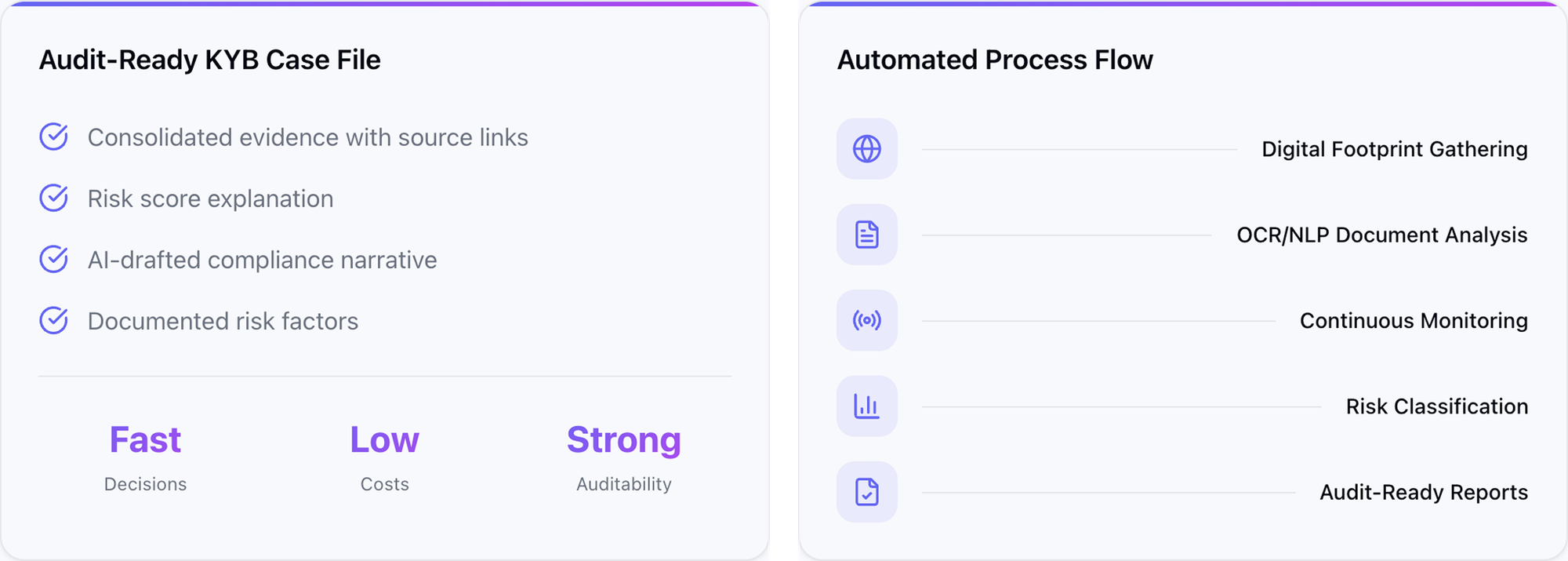

A mature AI-enabled KYB workflow typically integrates:

- Entity resolution and data consolidation

- Document intelligence (OCR and natural language processing)

- Screening and adverse media analysis with disambiguation

- Evidence-linked reporting with traceable rationale

- Continuous monitoring and change detection
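
To make the first component concrete, here is one simple form of entity resolution: normalizing company names and grouping records that likely describe the same business. It is a minimal sketch under stated assumptions, not how any particular product works. The legal-suffix list, the record fields (`name`, `country`, `registration_number`), and the matching rule are all hypothetical.

```python
import re
import unicodedata

# Hypothetical, deliberately short list; real programs keep per-jurisdiction legal-form lists.
LEGAL_SUFFIXES = {"ltd", "limited", "llc", "inc", "incorporated", "corp", "corporation", "gmbh", "plc"}


def normalize_company_name(name: str) -> str:
    """Lowercase, strip accents and punctuation, and drop trailing legal-form tokens."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    tokens = re.findall(r"[a-z0-9]+", without_marks.lower())
    while tokens and tokens[-1] in LEGAL_SUFFIXES:
        tokens.pop()
    return " ".join(tokens)


def same_entity(a: dict, b: dict) -> bool:
    """Prefer registration numbers when both records have one; otherwise compare names."""
    if a.get("country") != b.get("country"):
        return False
    if a.get("registration_number") and b.get("registration_number"):
        return a["registration_number"] == b["registration_number"]
    return normalize_company_name(a["name"]) == normalize_company_name(b["name"])


def consolidate(records: list[dict]) -> list[list[dict]]:
    """Greedily group records, comparing each new record with the first record of each group."""
    groups: list[list[dict]] = []
    for record in records:
        for group in groups:
            if same_entity(group[0], record):
                group.append(record)
                break
        else:
            groups.append([record])
    return groups
```

A registration number is the stronger key when both records carry one; name matching is only a fallback. Real programs would add fuzzy matching and transliteration handling rather than exact comparison of normalized strings.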

Where KYB bottlenecks concentrate

Across teams and jurisdictions, bottlenecks tend to repeat in predictable areas:

- Unstructured web presence signals that require manual interpretation

- Document review that triggers iterative requests for information

- Adverse media noise, duplication, and name ambiguity

- Inconsistent country and industry tiering across policies and analysts

AI agents create measurable impact when they reduce variability and manual effort in these areas while preserving human accountability for final decisions.

Web presence checks: high signal, high labor

Web presence analysis is often essential for assessing operational credibility and consistency with declared activity. Registries and customer-submitted documents rarely provide sufficient context on real-world operations, digital footprint, or market presence. Analysts therefore triangulate public signals such as website availability, contact consistency, official social profiles, third-party listings, and other indicators of business activity.

This work is labor-intensive because the evidence is not standardized. AI agents improve throughput by converting web presence into structured evidence categories, linking findings to sources, and generating concise rationales that can be reviewed and retained as part of the case file. The operational benefit is reduced time spent on manual browsing and ad hoc documentation.
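
One way to represent structured web presence evidence is a small record per finding that carries its category, the observation, the source URL, and whether it is consistent with the declared profile. The sketch below assumes a hypothetical five-category taxonomy (`EvidenceCategory`) and a plain-text rationale format; a production workflow would define both in policy.

```python
from dataclasses import dataclass, field
from enum import Enum


class EvidenceCategory(Enum):
    # Hypothetical taxonomy; a real program would define its own categories in policy.
    WEBSITE = "website"
    CONTACT = "contact"
    SOCIAL = "social_profile"
    THIRD_PARTY = "third_party_listing"
    ACTIVITY = "business_activity"


@dataclass
class WebFinding:
    category: EvidenceCategory
    claim: str         # what was observed, stated in one sentence
    source_url: str    # where it was observed, retained for the case file
    consistent: bool   # whether it matches the declared profile


@dataclass
class WebPresenceEvidence:
    entity_name: str
    findings: list[WebFinding] = field(default_factory=list)

    def add(self, finding: WebFinding) -> None:
        self.findings.append(finding)

    def by_category(self) -> dict[EvidenceCategory, list[WebFinding]]:
        grouped: dict[EvidenceCategory, list[WebFinding]] = {c: [] for c in EvidenceCategory}
        for finding in self.findings:
            grouped[finding.category].append(finding)
        return grouped

    def rationale(self) -> str:
        """Concise, reviewable summary: covered categories, gaps, and inconsistencies with sources."""
        grouped = self.by_category()
        covered = [c.value for c, found in grouped.items() if found]
        missing = [c.value for c, found in grouped.items() if not found]
        lines = [
            f"Entity: {self.entity_name}",
            f"Covered: {', '.join(covered) or 'none'}",
            f"No evidence: {', '.join(missing) or 'none'}",
        ]
        for f in self.findings:
            if not f.consistent:
                lines.append(f"Inconsistent ({f.category.value}): {f.claim} [{f.source_url}]")
        return "\n".join(lines)
```

Keeping the source URL on every finding is what lets the rationale be retained in the case file and checked later by a reviewer.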

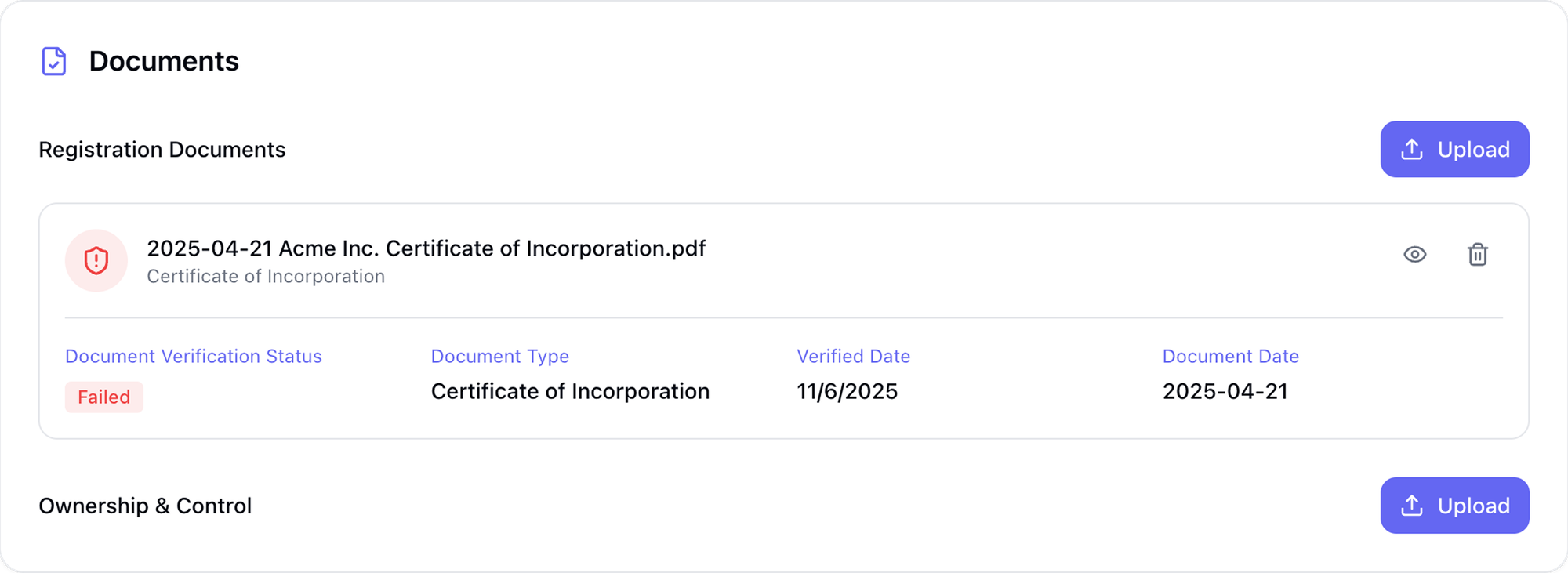

Document handling and RFI loops: a primary driver of cycle time

Document collection and verification frequently determine total onboarding duration. Corporate KYB cases may require incorporation documents, ownership and control records, proof of address, identification for directors and ultimate beneficial owners (UBOs), and additional materials depending on risk tier. Submissions are often incomplete or inconsistent, leading to repeated requests for information (RFIs) and rework.

AI agents reduce this burden by extracting key fields, validating internal consistency, and reconciling documents against registry data where available. The output should be an exception-focused review package:

- What was extracted and verified

- What is missing or expired

- What does not match declared information

- What requires escalation

This approach shifts analyst time from repetitive extraction to higher-value review and decision-making.
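
The four-part review package can be sketched as a function that compares extracted fields with declared and registry values and sorts each field into one bucket. This is a minimal sketch under assumptions: the field names, the case- and whitespace-insensitive comparison, and the rule that mismatches on `registration_number` or `ubo_name` are escalated are hypothetical placeholders for policy-defined rules.

```python
from dataclasses import dataclass, field
from datetime import date


def _norm(value: str) -> str:
    # Case- and whitespace-insensitive comparison; real matching rules would be field-specific.
    return " ".join(value.split()).casefold()


@dataclass
class ReviewPackage:
    verified: dict[str, str] = field(default_factory=dict)       # extracted and consistent
    missing_or_expired: list[str] = field(default_factory=list)
    mismatches: list[str] = field(default_factory=list)          # conflicts with declared or registry data
    escalations: list[str] = field(default_factory=list)         # mismatches on fields policy escalates


def build_review_package(
    extracted: dict[str, str],    # fields pulled from documents, e.g. by OCR/NLP
    declared: dict[str, str],     # values the customer declared
    registry: dict[str, str],     # registry values; may be empty where unavailable
    expiries: dict[str, date],    # validity end dates for time-bound documents
    required: list[str],          # fields required for this case's risk tier
    today: date,
    escalate_fields: frozenset[str] = frozenset({"registration_number", "ubo_name"}),
) -> ReviewPackage:
    pkg = ReviewPackage()
    for name in required:
        value = extracted.get(name)
        if value is None:
            pkg.missing_or_expired.append(f"{name}: not provided")
            continue
        expiry = expiries.get(name)
        if expiry is not None and expiry < today:
            pkg.missing_or_expired.append(f"{name}: expired {expiry.isoformat()}")
            continue
        # Compare with every reference source that has a value for this field.
        conflicts = [
            f"{source}={ref[name]!r}"
            for source, ref in (("declared", declared), ("registry", registry))
            if name in ref and _norm(ref[name]) != _norm(value)
        ]
        if conflicts:
            message = f"{name}: document={value!r} vs " + ", ".join(conflicts)
            pkg.mismatches.append(message)
            if name in escalate_fields:
                pkg.escalations.append(message)
        else:
            pkg.verified[name] = value
    return pkg
```

The analyst then reads four short lists instead of re-extracting every field, which is the shift from extraction to review that the section describes.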

Adverse media screening: reducing noise while preserving auditability

Adverse media screening is important for identifying risks not captured by formal lists, including allegations of fraud, regulatory actions, litigation, and reputational events. However, traditional keyword-based searches generate large volumes of low-quality results. False positives can be substantial, and duplication across syndicated sources increases manual review time. Name ambiguity and transliteration further increase the operational burden.

AI agents improve adverse media workflows by standardizing the process:

- Collecting relevant coverage across prioritized sources

- De-duplicating and clustering content into distinct events

- Ranking results by relevance to the entity and associated individuals

- Labeling events by risk theme and severity

- Linking evidence back to original sources for traceability

This produces a reviewable event-based record rather than a flat list of articles, supporting both operational efficiency and audit requirements.
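
The adverse media steps can be approximated with simple heuristics: exact-duplicate removal for syndicated copies, greedy clustering of articles with similar titles published within a few days of each other, keyword-based theme and severity labels, and a relevance score equal to the share of target names that appear as whole phrases. Every threshold, keyword list, and severity weight in this sketch is an assumption for illustration; production systems typically use trained classifiers, near-duplicate detection, and transliteration-aware name matching instead.

```python
import hashlib
import re
from dataclasses import dataclass, field
from datetime import date

# Hypothetical theme keywords and severity weights, for illustration only.
THEMES = {
    "fraud": {"fraud", "embezzlement", "ponzi"},
    "regulatory": {"fined", "fines", "enforcement", "regulator"},
    "litigation": {"lawsuit", "sued", "court"},
}
SEVERITY = {"fraud": 3, "regulatory": 2, "litigation": 1}


@dataclass
class Article:
    url: str            # original source, kept for traceability
    published: date
    title: str
    text: str


@dataclass
class Event:
    articles: list[Article] = field(default_factory=list)
    themes: set[str] = field(default_factory=set)
    severity: int = 0
    relevance: float = 0.0

    @property
    def sources(self) -> list[str]:
        return [a.url for a in self.articles]


def _normalize(s: str) -> str:
    return " ".join(re.findall(r"[a-z0-9]+", s.lower()))


def _jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def dedupe(articles: list[Article]) -> list[Article]:
    """Drop syndicated copies whose normalized body text is identical."""
    seen: set[str] = set()
    unique: list[Article] = []
    for a in articles:
        key = hashlib.sha256(_normalize(a.text).encode()).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(a)
    return unique


def cluster_events(articles: list[Article], names: list[str],
                   threshold: float = 0.5, window_days: int = 7) -> list[Event]:
    """Group articles into events, then label them and rank by relevance to `names`."""
    events: list[Event] = []
    for art in sorted(dedupe(articles), key=lambda a: a.published):
        title_tokens = set(_normalize(art.title).split())
        for ev in events:
            first = ev.articles[0]
            close_in_time = abs((art.published - first.published).days) <= window_days
            similar = _jaccard(title_tokens, set(_normalize(first.title).split())) >= threshold
            if close_in_time and similar:
                ev.articles.append(art)
                break
        else:
            events.append(Event(articles=[art]))

    for ev in events:
        corpus = " ".join(_normalize(f"{a.title} {a.text}") for a in ev.articles)
        words = set(corpus.split())
        ev.themes = {theme for theme, kws in THEMES.items() if words & kws}
        ev.severity = max((SEVERITY[t] for t in ev.themes), default=0)
        # Whole-phrase match on normalized text limits partial-name collisions.
        hits = sum(f" {_normalize(n)} " in f" {corpus} " for n in names)
        ev.relevance = hits / len(names) if names else 0.0
    return sorted(events, key=lambda e: (e.relevance, e.severity, len(e.articles)), reverse=True)
```

Each `Event` keeps its articles, so `sources` always links a labeled event back to the original URLs.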

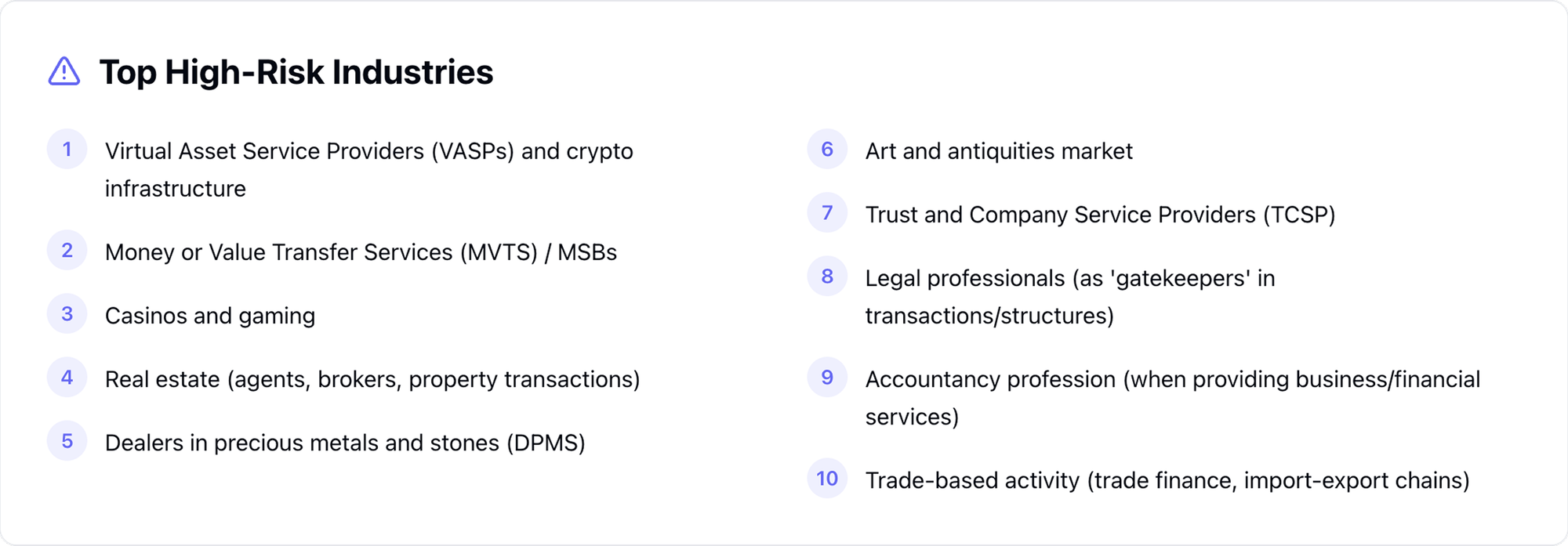

Country and industry risk tiering: improving consistency through contextual evidence

Country and industry tiering often lacks consistency because institutions rely on different reference indicators and apply different interpretations. This variability increases governance overhead, creates unnecessary escalations, and can lead to uneven scrutiny across comparable cases.

AI agents help by adding contextual evidence to classification decisions rather than relying only on static labels. For country exposure, this can include incorporation and registration jurisdictions, declared operating countries, sanctions exposure, adverse media signals, and public geographic indicators. For industry classification, the agent can compare declared activity against website content, public positioning, customer signals, and document evidence, then flag mismatches and provide a rationale for review.

The objective is repeatability. Given the same inputs, the tier and its explanation should come out the same, and they should be re-run whenever inputs change so that re-ratings stay consistent over time.
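
Repeatability can be made concrete by treating the tier as a pure function of its inputs and a versioned policy, and by fingerprinting those inputs so a reviewer can tell whether a re-rating reflects changed facts or an unchanged case. In this sketch the country and industry scores, the tier thresholds, the policy version string, and the rule of scoring on the riskier of declared and observed industry are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical, versioned reference scores (1 = lower risk, 3 = higher risk).
POLICY_VERSION = "example-policy-v1"
COUNTRY_RISK = {"GB": 1, "DE": 1, "AE": 2, "PA": 3}
INDUSTRY_RISK = {"software": 1, "wholesale_trade": 2, "money_services": 3}
UNKNOWN_RISK = 3  # unlisted countries or industries default to the highest score


@dataclass(frozen=True)
class TierResult:
    tier: str
    score: int
    rationale: tuple[str, ...]
    input_fingerprint: str   # changes whenever the inputs or the policy version change


def assign_tier(
    incorporation_country: str,
    operating_countries: list[str],
    declared_industry: str,
    observed_industry: Optional[str] = None,   # e.g. inferred from website content
    adverse_media_events: int = 0,
) -> TierResult:
    countries = sorted({incorporation_country, *operating_countries})
    country_score = max(COUNTRY_RISK.get(c, UNKNOWN_RISK) for c in countries)
    reasons = [f"country exposure {', '.join(countries)} -> {country_score}"]

    industry_score = INDUSTRY_RISK.get(declared_industry, UNKNOWN_RISK)
    if observed_industry and observed_industry != declared_industry:
        # Flag the mismatch and score on the riskier of the two classifications.
        industry_score = max(industry_score, INDUSTRY_RISK.get(observed_industry, UNKNOWN_RISK))
        reasons.append(f"industry mismatch: declared {declared_industry}, observed {observed_industry}")
    reasons.append(f"industry -> {industry_score}")

    media_score = min(adverse_media_events, 2)
    reasons.append(f"adverse media events {adverse_media_events} -> {media_score}")

    score = country_score + industry_score + media_score
    tier = "low" if score <= 2 else "medium" if score <= 4 else "high"

    canonical = {
        "policy": POLICY_VERSION,
        "incorporation_country": incorporation_country,
        "countries": countries,
        "declared_industry": declared_industry,
        "observed_industry": observed_industry,
        "adverse_media_events": adverse_media_events,
    }
    fingerprint = hashlib.sha256(json.dumps(canonical, sort_keys=True).encode()).hexdigest()
    return TierResult(tier, score, tuple(reasons), fingerprint)
```

Because the function has no hidden state, running it twice on the same case yields an identical result, and any change in tier can be traced to a changed input or a new policy version.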

What audit-ready output should include

For AI agents to be operationally credible in KYB, outputs should align with audit expectations and internal governance. An audit-ready case file typically includes:

- Consolidated evidence with source links

- Documented risk factors and exceptions

- Clear rationale behind the resulting risk assessment

- A structured narrative suitable for review and sign-off

- An activity log that supports traceability and internal controls

This model does not remove accountability from compliance teams. It reduces manual effort where judgment is not required, and it strengthens consistency where governance demands repeatable logic.
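
A case file with these elements can be modeled as a simple record. The sketch below is one possible shape with hypothetical field and method names; its activity log is hash-chained, so editing or reordering an earlier entry makes verification fail. The chain alone does not detect truncation of the most recent entries, so it would complement, not replace, write-once storage and access controls.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def _digest(entry: dict) -> str:
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


@dataclass
class CaseFile:
    counterparty: str
    evidence: list[dict] = field(default_factory=list)       # {"claim": ..., "source_url": ...}
    risk_factors: list[str] = field(default_factory=list)
    exceptions: list[str] = field(default_factory=list)
    rationale: str = ""
    narrative: str = ""
    log: list[dict] = field(default_factory=list)            # hash-chained activity log

    def record(self, actor: str, action: str, detail: str, at: Optional[datetime] = None) -> None:
        entry = {
            "at": (at or datetime.now(timezone.utc)).isoformat(),
            "actor": actor,
            "action": action,
            "detail": detail,
            "prev": self.log[-1]["hash"] if self.log else GENESIS,
        }
        entry["hash"] = _digest(entry)  # hash covers every field above, including "prev"
        self.log.append(entry)

    def add_evidence(self, claim: str, source_url: str, actor: str) -> None:
        self.evidence.append({"claim": claim, "source_url": source_url})
        self.record(actor, "add_evidence", f"{claim} [{source_url}]")

    def verify_log(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.log:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def signoff_gaps(self) -> list[str]:
        """List what still blocks human sign-off; an empty list means ready for review."""
        gaps = []
        if not self.evidence:
            gaps.append("no evidence")
        if any(not e["source_url"] for e in self.evidence):
            gaps.append("evidence without source link")
        if not self.rationale:
            gaps.append("missing rationale")
        if not self.narrative:
            gaps.append("missing narrative")
        if not self.verify_log():
            gaps.append("activity log failed verification")
        return gaps
```

`signoff_gaps` only reports what blocks review; the sign-off decision itself stays with the compliance team.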

About Scoreplex

Scoreplex KYB AI-Coworker is an AI-powered KYB workflow that assembles a standardized, audit-ready case file end-to-end, from business identity and digital footprint to documents and a final due diligence narrative.

It builds a structured company baseline, consolidates web presence into a single evidence pack, and manages documents and questionnaires with clear statuses and traceable source links.

Registry, UBO, sanctions & PEP: Enriches the baseline with registry data, maps ownership and control to identify UBOs and related parties, and runs sanctions and politically exposed person (PEP) screening with evidence-linked sources.

Web presence check: Normalizes website, domain, social, third-party profile, and review signals into consistent categories with source links.

Document verification: Extracts KYB fields via OCR/NLP, cross-checks them against other submitted documents, registries (where available), and questionnaires, and returns an exception list of gaps and mismatches.

Adverse media analysis: Collects broadly, deduplicates and ranks results, reduces name-collision noise, and clusters coverage into risk-labeled events with evidence-linked sources.

Due diligence narrative: Generates an AI-drafted, report-ready narrative that explains the risk outcome and cites the evidence trail.

AI agent constructor: Lets teams configure workflows, checks, and outputs to their needs while preserving an audit-ready trail.

The output is one consistent case file per counterparty, reducing manual assembly and speeding reviews by focusing analysts on exceptions rather than collection.

Download the full white paper

This blog post is a condensed version of the full document. The complete white paper expands on each bottleneck, includes supporting references and examples, and outlines evaluation criteria for evidence-first KYB automation.
